Tools I love - My development stack 2023


The list is not exhaustive, but here we go:

bash

I love working with the Windows Subsystem for Linux (WSL). I used the fish shell for years but switched back to bash.

If you want to get familiar with it, have a look at [1] and [2].

Examples I run in bash all the time:

export PYTHONPATH=$PYTHONPATH:src

Or this one (and yes, I do not use vim yet 😅):

nano ~/.bashrc
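A quick way to see what the PYTHONPATH export above does: every directory listed there is added to Python's module search path (`sys.path`), which is why packages under `src` become importable without installing them. The sketch below checks this by starting a fresh interpreter with a modified environment. The helper name `search_path_with` is my own and not part of any tool.

```python
import os
import subprocess
import sys


def search_path_with(extra_dir: str) -> list[str]:
    """Return sys.path of a fresh interpreter whose PYTHONPATH has extra_dir appended."""
    env = os.environ.copy()
    # Mirror `export PYTHONPATH=$PYTHONPATH:src` (os.pathsep is ":" on Linux/WSL),
    # skipping the empty entry you would get when PYTHONPATH was unset.
    existing = env.get("PYTHONPATH", "")
    env["PYTHONPATH"] = os.pathsep.join(p for p in (existing, extra_dir) if p)
    result = subprocess.run(
        [sys.executable, "-c", "import sys; print('\\n'.join(sys.path))"],
        env=env,
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.splitlines()


if __name__ == "__main__":
    src = os.path.abspath("src")
    print(src in search_path_with(src))  # True once PYTHONPATH includes src
```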

Starship prompt

I am a command-line kind of guy. Even as a kid I was tweaking my Counter-Strike settings in config (.cfg) files. If you are like me, you will love this upgrade to your prompt.

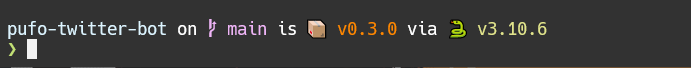

Here is a little preview of how it looks in my Python project:

Excel

This one deserves a post of its own. Coming from finance and having worked at a large corporation, I have seen quite a lot of impressive Excel sheets in my time, and I can say I developed quite good skills myself.

I will dedicate another post to Excel. For inspiration, check out "You Suck at Excel" with Joel Spolsky:

pyenv

I use pyenv to handle different Python versions. Also quite popular is nodenv.
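How pyenv picks a version, as far as I understand its documented lookup order: first the `PYENV_VERSION` environment variable (what `pyenv shell` sets), then the nearest `.python-version` file in the current or any parent directory (what `pyenv local` writes), then the global `version` file in the pyenv root (what `pyenv global` writes), and otherwise the system Python. Here is a simplified sketch of that order. `resolve_pyenv_version` is a hypothetical helper, and it only reads the first version from each file, while pyenv itself accepts several.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def _first_version(path: Path) -> Optional[str]:
    """Return the first non-empty line of a version file, or None if there is none."""
    if not path.is_file():
        return None
    for line in path.read_text().splitlines():
        if line.strip():
            return line.strip()
    return None


def resolve_pyenv_version(cwd: Path, env: Mapping[str, str], pyenv_root: Path) -> str:
    """Hypothetical helper approximating pyenv's version-selection order."""
    # 1. PYENV_VERSION (set by `pyenv shell`) wins.
    if env.get("PYENV_VERSION"):
        return env["PYENV_VERSION"]
    # 2. The nearest .python-version file (written by `pyenv local`), walking up to /.
    for directory in (cwd, *cwd.parents):
        version = _first_version(directory / ".python-version")
        if version:
            return version
    # 3. The global version file (written by `pyenv global`), else the system Python.
    return _first_version(pyenv_root / "version") or "system"


if __name__ == "__main__":
    root = Path(os.environ.get("PYENV_ROOT", Path.home() / ".pyenv"))
    print(resolve_pyenv_version(Path.cwd(), os.environ, root))
```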

pipx

Let the pipx tagline speak for itself:

pipx — Install and Run Python Applications in Isolated Environments

And it does exactly that; it is awesome. I like to install command-line tools such as black or poetry with pipx.

For example, to see what is installed globally:

$ pipx list --short
black 18.9b0
pipx 0.10.0
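Since the short listing is just one `name version` pair per line, it is easy to use from a script. A small sketch, assuming that two-column format holds (the helper `parse_pipx_short` is hypothetical), fed with the output shown above:

```python
def parse_pipx_short(output: str) -> dict[str, str]:
    """Map package name -> version from `pipx list --short` style text."""
    packages = {}
    for line in output.splitlines():
        parts = line.split()
        # Keep only "name version" lines; skip blank or unexpected ones.
        if len(parts) == 2:
            name, version = parts
            packages[name] = version
    return packages


sample = "black 18.9b0\npipx 0.10.0\n"  # the listing from above
print(parse_pipx_short(sample))  # {'black': '18.9b0', 'pipx': '0.10.0'}
```

On a real machine you would pass it `subprocess.run(["pipx", "list", "--short"], capture_output=True, text=True).stdout` instead of the sample string.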

Another interesting command is pipx inject.

It installs additional packages into the virtual environment of an app that pipx already manages.

I mainly use it to set up my Jupyter environment like this:

$ pipx inject jupyterlab numpy

This lets me use numpy inside JupyterLab (with the default kernel).
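Why this works, as far as I can tell: the default kernel runs on the interpreter inside the jupyterlab virtual environment that pipx created, so anything injected there is importable from your notebooks. A quick sanity check you could paste into a cell; it uses only the standard library, and `kernel_report` is just a made-up helper name:

```python
import importlib.util
import sys


def kernel_report(*packages: str) -> dict[str, bool]:
    """Report which of the given top-level packages this interpreter can import."""
    return {name: importlib.util.find_spec(name) is not None for name in packages}


# With a pipx-installed JupyterLab and the default kernel, this path should point
# into pipx's venv for jupyterlab (the exact location depends on your setup).
print(sys.executable)
print(kernel_report("numpy"))
```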

poetry

It handles dependency management and Python packaging. To keep it short: poetry is the modern successor to setuptools.

It has excellent documentation, is fast, and is simply superior to conda or miniconda.

That said, at work we default to a mix: miniconda provides the Python interpreter and poetry manages the dependencies. There can be a use case for this combination; this answer on Stack Exchange explains it best.

Jupyter-Lab

For a data scientist, notebooks are the de facto way to start exploring any data set. You also get quite used to them on any learning path, whether on Google, Kaggle, or anywhere else.

Here are the magic commands I almost always put at the beginning of my notebooks:

%load_ext autoreload
%autoreload 2

This automatically reloads modules before executing each cell. It might not seem optimal for CPU usage, but it works wonders when you are writing your own modules (or importing ever-changing functions from .py files).
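To get a feel for what this automates: the manual equivalent is calling `importlib.reload` after editing a module's source file. The self-contained sketch below does exactly that with a throwaway module (`scratch_helpers_demo` is a made-up name). It is a simplification: autoreload also does extra work, such as upgrading functions and classes you imported with `from module import name`, which plain `reload` does not.

```python
import importlib
import sys
import tempfile
from pathlib import Path

MODULE_NAME = "scratch_helpers_demo"  # made-up module name for the demo


def demo_reload() -> tuple[str, str]:
    """Import a throwaway module, edit its source, reload it, and return both results."""
    old_flag = sys.dont_write_bytecode
    sys.dont_write_bytecode = True  # no .pyc, so the edited source is always re-read
    with tempfile.TemporaryDirectory() as tmp:
        module_file = Path(tmp) / f"{MODULE_NAME}.py"
        module_file.write_text("def greet():\n    return 'hello'\n")
        sys.path.insert(0, tmp)
        sys.modules.pop(MODULE_NAME, None)
        importlib.invalidate_caches()
        try:
            module = importlib.import_module(MODULE_NAME)
            before = module.greet()
            # Simulate editing the .py file while the notebook session keeps running.
            module_file.write_text("def greet():\n    return 'hello, reloaded'\n")
            module = importlib.reload(module)
            after = module.greet()
        finally:
            sys.path.remove(tmp)
            sys.modules.pop(MODULE_NAME, None)
            sys.dont_write_bytecode = old_flag
    return before, after


print(demo_reload())  # ('hello', 'hello, reloaded')
```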